Measurement system

Concept of Measurement system

Measurement system, any of the systems used in the process of associating numbers with physical quantities and phenomena. Although the concept of weights and measures today includes such factors as temperature, luminosity, pressure, and electric current, it once consisted of only four basic measurements: mass (weight), distance or length, area, and volume (liquid or grain measure). The last three are, of course, closely related.

Basic to the whole idea of weights and measures are the concepts of uniformity, units, and standards. Uniformity, the essence of any system of weights and measures, requires accurate, reliable standards of mass and length and agreed-on units. A unit is the name of a quantity, such as kilogram or pound. A standard is the physical embodiment of a unit, such as the platinum-iridium cylinder kept by the International Bureau of Weights and Measures at Sèvres, near Paris, which served as the standard kilogram until 2019.

Two types of measurement systems are distinguished historically: an evolutionary system, such as the British Imperial, which grew more or less haphazardly out of custom, and a planned system, such as the International System of Units (SI; Système International d’Unités), in universal use by the world’s scientific community and by most nations.

Early units and standards

Ancient Mediterranean systems

Body measurements and common natural items probably provided the most convenient bases for early linear measurements; early weight units may have derived casually from the use of certain stones or containers or from determinations of what a person or animal could lift or haul.

The historical progression of units has followed a generally westward direction, the units of the ancient empires of the Middle East finding their way, mostly as a result of trade and conquest, to the Greek and then the Roman empires, thence to Gaul and Britain via Roman expansion.

The Egyptians

Although there is evidence that many early civilizations devised standards of measurement and some tools for measuring, the Egyptian cubit is generally recognized as having been the most ubiquitous standard of linear measurement in the ancient world. Developed about 3000 bc, it was based on the length of the arm from the elbow to the extended fingertips and was standardized by a royal master cubit of black granite, against which all the cubit sticks or rules in use in Egypt were measured at regular intervals.

The royal cubit (524 mm, or 20.63 inches) was subdivided in an extraordinarily complicated way. The basic subunit was the digit, doubtlessly a finger’s breadth, of which there were 28 in the royal cubit. Four digits equaled a palm, five a hand. Twelve digits, or three palms, equaled a small span. Fourteen digits, or one-half a cubit, equaled a large span. Sixteen digits, or four palms, made one t’ser. Twenty-four digits, or six palms, were a small cubit.

The digit was in turn subdivided. The 14th digit on a cubit stick was marked off into 16 equal parts. The next digit was divided into 15 parts, and so on, to the 28th digit, which was divided into 2 equal parts. Thus, measurement could be made to digit fractions with any denominator from 2 through 16. The smallest division, 1/16 of a digit, was equal to 1/448 part of a royal cubit.
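The subdivision scheme described in the preceding paragraphs can be checked with a short Python sketch. It uses only the figures given in the text (a 524 mm cubit of 28 digits); the rule that the digit at position n carries 30 − n parts is simply a restatement of the pattern running from 16 parts on the 14th digit down to 2 parts on the 28th.

```python
from fractions import Fraction

ROYAL_CUBIT_MM = 524     # royal cubit, as given in the text
DIGITS_PER_CUBIT = 28

# Named subunits of the royal cubit, expressed in digits.
SUBUNIT_DIGITS = {
    "digit": 1,
    "palm": 4,
    "hand": 5,
    "small span": 12,
    "large span": 14,
    "t'ser": 16,
    "small cubit": 24,
    "royal cubit": 28,
}


def subunit_mm(name: str) -> float:
    """Length of a named subunit in millimetres, scaled from the 524 mm cubit."""
    return SUBUNIT_DIGITS[name] * ROYAL_CUBIT_MM / DIGITS_PER_CUBIT


def digit_divisions() -> dict[int, int]:
    """Number of equal parts marked on each digit from the 14th to the 28th.

    The 14th digit has 16 parts and each following digit one part fewer,
    so the digit at position n carries 30 - n parts.
    """
    return {n: 30 - n for n in range(14, DIGITS_PER_CUBIT + 1)}


finest_parts = max(digit_divisions().values())  # 16 parts on the 14th digit
smallest_division = Fraction(1, finest_parts * DIGITS_PER_CUBIT)

for name in SUBUNIT_DIGITS:
    print(f"{name:>12}: {subunit_mm(name):6.1f} mm")
print(f"smallest division = {smallest_division} of a royal cubit")
```

Every denominator from 2 through 16 appears exactly once in `digit_divisions()`, which is the property the text highlights.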

The accuracy of the cubit stick is attested by the dimensions of the Great Pyramid of Giza; although thousands were employed in building it, its sides vary no more than 0.05 percent from the mean length of 230.364 metres (9,069.43 inches), which suggests the original dimensions were 440 by 440 royal cubits.
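The inference from the pyramid's dimensions is a simple division. The sketch below assumes only the two figures quoted above: dividing 230.364 metres by 440 gives a cubit of about 523.6 mm, within half a millimetre of the 524 mm royal cubit, and a 0.05 percent tolerance corresponds to roughly 11.5 cm on a side.

```python
MEAN_SIDE_M = 230.364     # mean side length of the Great Pyramid, from the text
DESIGN_CUBITS = 440       # presumed original design length in royal cubits
TOLERANCE = 0.0005        # "no more than 0.05 percent"

# Cubit length implied by dividing the measured side by the design length.
implied_cubit_mm = MEAN_SIDE_M / DESIGN_CUBITS * 1000

# Largest deviation from the mean permitted by a 0.05 percent tolerance.
max_deviation_cm = MEAN_SIDE_M * TOLERANCE * 100

print(f"implied royal cubit: {implied_cubit_mm:.1f} mm")
print(f"0.05 percent of a side: {max_deviation_cm:.1f} cm")
```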

The Egyptians developed methods and instruments for measuring land at a very early date. The annual flood of the Nile River created a need for benchmarks and surveying techniques so that property boundaries could be readily reestablished when the water receded.

The Egyptian weight system appears to have been founded on a unit called the kite, with a decimal ratio, 10 kites equaling 1 deben and 10 debens equaling 1 sep. Over the long duration of Egyptian history, the weight of the kite varied from period to period, ranging all the way from 4.5 to 29.9 grams (0.16 to 1.05 ounce). Approximately 3,500 different weights have been recovered from ancient Egypt, some in basic geometric shapes, others in human and animal forms.

Egyptian liquid measures, from small to large, were ro, hin, hekat, khar, and cubic cubit.

The Babylonians

Among the earliest of all known weights is the Babylonian mina, which in one surviving form weighed about 640 grams (about 23 ounces) and in another about 978 grams (about 34 ounces). Archaeologists have also found weights of 5 minas, in the shape of a duck, and a 30-mina weight in the form of a swan. The shekel, familiar from the Bible as a standard Hebrew coin and weight, was originally Babylonian. Most of the Babylonian weights and measures, carried in commerce throughout the Middle East, were gradually adopted by other countries. The basic Babylonian unit of length was the kus (about 530 mm, or 20.9 inches), also called the Babylonian cubit. The Babylonian shusi, defined as 1/30 kus, was equal to 17.5 mm (0.69 inch). The Babylonian foot was 2/3 kus.

The Babylonian liquid measure, qa (also spelled ka), was the volume of a cube of one handbreadth (about 0.99 to 1.02 litres, or about 60.4 to 62.2 cubic inches). The cube, however, had to contain a weight of one great mina of water. The qa was a subdivision of two other units; 300 qa equaled 60 gin or 1 gur. The gur represented a volume of almost 303 litres (80 U.S. gallons).

The Hittites, Assyrians, Phoenicians, and Hebrews derived their systems generally from the Babylonians and Egyptians. Hebrew standards were based on the relationship between the mina, the talent (the basic unit), and the shekel. The sacred mina was equal to 60 shekels, and the sacred talent to 3,000 shekels, or 50 sacred minas. The Talmudic mina equaled 25 shekels; the Talmudic talent equaled 1,500 shekels, or 60 Talmudic minas.
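The stated shekel values fix the number of minas in each talent. A minimal sketch, using only the figures in the text, derives those ratios from the shekel counts:

```python
from fractions import Fraction

# Shekels per mina and per talent in each Hebrew system, as given in the text.
HEBREW_SYSTEMS = {
    "sacred": {"mina": 60, "talent": 3000},
    "Talmudic": {"mina": 25, "talent": 1500},
}


def minas_per_talent(system: str) -> Fraction:
    """Number of minas in one talent, derived from their values in shekels."""
    units = HEBREW_SYSTEMS[system]
    return Fraction(units["talent"], units["mina"])


for system in HEBREW_SYSTEMS:
    print(f"{system}: 1 talent = {minas_per_talent(system)} minas")
```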

The volumes of the several Hebrew standards of liquid measure are not definitely known; the bat may have contained about 37 litres (nearly 10 U.S. gallons); if so, the log equaled slightly more than 0.5 litre (0.14 U.S. gallon), and the hin slightly more than 6 litres (1.6 U.S. gallons). The Hebrew system was notable for the close relationship between dry and liquid volumetric measures; the liquid kor was the same size as the dry homer, and the liquid bat corresponded to the dry ʾefa.
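The hin and log figures follow from the bat once the ratios between the units are fixed. The text does not state those ratios; the sketch below assumes the traditional values 1 bat = 6 hin and 1 hin = 12 log, which reproduce the figures quoted (about 6.2 litres and 0.51 litre). The U.S. gallon is taken as exactly 3.785411784 litres.

```python
LITRES_PER_US_GALLON = 3.785411784   # definition of the U.S. gallon (231 cubic inches)

BAT_LITRES = 37.0   # the text's tentative value for the bat

# Assumption (not stated in the text): the traditional ratios
# 1 bat = 6 hin and 1 hin = 12 log.
HIN_PER_BAT = 6
LOG_PER_HIN = 12

hin_litres = BAT_LITRES / HIN_PER_BAT
log_litres = hin_litres / LOG_PER_HIN

for name, litres in [("bat", BAT_LITRES), ("hin", hin_litres), ("log", log_litres)]:
    print(f"{name}: {litres:.2f} L = {litres / LITRES_PER_US_GALLON:.2f} U.S. gal")
```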

Greeks and Romans

In the 1st millennium bc commercial domination of the Mediterranean passed into the hands of the Greeks and then the Romans. A basic Greek unit of length was the finger (19.3 mm, or 0.76 inch); 16 fingers equaled about 30 cm (about 1 foot), and 24 fingers equaled 1 Olympic cubit. The coincidence with the Egyptian 24 digits equaling 1 small cubit suggests what is altogether probable on the basis of the commercial history of the era: that the Greeks derived their measures partly from the Egyptians and partly from the Babylonians, probably via the Phoenicians, who for a long time dominated vast expanses of the Mediterranean trade. The Greeks apparently used linear standards to establish their primary liquid measure, the metrētēs, equivalent to 39.4 litres (10.4 U.S. gallons). A basic Greek unit of weight was the talent (equal to 25.8 kg, or 56.9 pounds), obviously borrowed from Eastern neighbours.

Roman linear measures were based on the Roman standard foot (pes). This unit was divided into 16 digits or into 12 inches. In both cases its length was the same. Metrologists have come to differing conclusions concerning its exact length, but the currently accepted modern equivalent is 296 mm, or 11.65 inches. Expressed in terms of this equivalent, the digit (digitus), or 1/16 foot, was 18.5 mm (0.73 inch); the inch (uncia or pollicus), or 1/12 foot, was 24.67 mm (0.97 inch); and the palm (palmus), or 1/4 foot, was 74 mm (2.91 inches).

Larger linear units were always expressed in feet. The cubit (cubitum) was 1½ feet (444 mm, or 17.48 inches). Five Roman feet made the pace (passus), equivalent to 1.48 metres, or 4.86 feet.

The most frequently used itinerary measures were the furlong or stade (stadium), the mile (mille passus), and the league (leuga). The stade consisted of 625 feet (185 metres, or 606.9 feet), or 125 paces, and was equal to one-eighth mile. The mile was 5,000 feet (1,480 metres, or 4,856 feet), or 8 stades. The league had 7,500 feet (2,220 metres, or 7,283 feet), or 1,500 paces.
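Because every Roman linear unit above is a fixed multiple of the pes, the whole table follows from a single value. The sketch below assumes the 296 mm foot given in the text; changing `PES_MM` rescales every unit at once, which is why differing estimates of the foot shift all the modern equivalents together.

```python
PES_MM = 296  # accepted modern value of the Roman foot (pes)

# Roman linear units expressed in Roman feet, following the text.
ROMAN_FEET = {
    "digitus": 1 / 16,
    "uncia": 1 / 12,
    "palmus": 1 / 4,
    "pes": 1,
    "cubitum": 1.5,
    "passus": 5,
    "stadium": 625,
    "mille passus": 5000,
    "leuga": 7500,
}


def roman_to_metres(unit: str) -> float:
    """Modern length in metres of one Roman unit, assuming a 296 mm pes."""
    return ROMAN_FEET[unit] * PES_MM / 1000


for unit in ROMAN_FEET:
    print(f"{unit:>12}: {roman_to_metres(unit):10.4f} m")
```

The ratios in the text (125 paces to the stade, 8 stades to the mile, 1,500 paces to the league) are built into the table rather than computed separately.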

Prior to the 3rd century bc the standard for all Roman weights was the as, or Old Etruscan or Oscan pound, of 4,210 grains (272.81 grams). It was divided into 12 ounces of 351 grains (22.73 grams) each. In 268 bc a new standard was created when a silver denarius was struck to a weight of 70.5 grains (4.57 grams). Six of these denarii, or “pennyweights,” were reckoned to the ounce (uncia) of 423 grains (27.41 grams), and 72 of them made the new pound (libra) of 12 ounces, or 5,076 grains (328.9 grams).
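The weight figures above can be reproduced from the denarius alone. This sketch assumes the modern definition of the grain as exactly 64.79891 milligrams (not stated in the text) to convert grains to grams:

```python
GRAMS_PER_GRAIN = 0.06479891  # the grain, defined as exactly 64.79891 mg

AS_GRAINS = 4210                      # pre-268 bc pound (as)
OLD_UNCIA_GRAINS = AS_GRAINS / 12     # about 351 grains
DENARIUS_GRAINS = 70.5                # silver denarius of 268 bc
UNCIA_GRAINS = 6 * DENARIUS_GRAINS    # 423 grains
LIBRA_GRAINS = 12 * UNCIA_GRAINS      # 5,076 grains, i.e. 72 denarii


def grains_to_grams(grains: float) -> float:
    """Convert a weight in grains to grams."""
    return grains * GRAMS_PER_GRAIN


for name, grains in [
    ("as", AS_GRAINS),
    ("old uncia", OLD_UNCIA_GRAINS),
    ("denarius", DENARIUS_GRAINS),
    ("uncia", UNCIA_GRAINS),
    ("libra", LIBRA_GRAINS),
]:
    print(f"{name:>9}: {grains:8.1f} grains = {grains_to_grams(grains):7.2f} g")
```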

The principal Roman capacity measures were the hemina, sextarius, modius, and amphora for dry products and the quartarus, sextarius, congius, urna, and amphora for liquids. Since all of these were based on the sextarius and since no two extant sextarii are identical, a mean generally agreed upon today is 35.4 cubic inches, or nearly 1 pint (0.58 litre). The hemina, or half-sextarius, based on this mean was 17.7 cubic inches (0.29 litre). Sixteen of these sextarii made the modius of 566.4 cubic inches (9.28 litres), and 48 of them made the amphora of 1,699.2 cubic inches (27.84 litres).

In the liquid series, the quartarus, or one-fourth of a sextarius (35.4 cubic inches), was 8.85 cubic inches (0.145 litre). Six sextarii made the congius of 212.4 cubic inches (3.48 litres), 24 sextarii made the urna of 849.6 cubic inches (13.92 litres), and, as in dry products, 48 sextarii were equal to one amphora.
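All of the capacity measures are multiples of the sextarius, so one value generates both series. A minimal sketch, assuming the mean of 35.4 cubic inches (0.58 litre) given above:

```python
SEXTARIUS_CUBIC_INCHES = 35.4   # agreed mean value of the sextarius
SEXTARIUS_LITRES = 0.58         # the same mean, rounded, in litres

# Dry and liquid capacity units as multiples of the sextarius, per the text.
SEXTARII = {
    "quartarus": 0.25,
    "hemina": 0.5,
    "sextarius": 1,
    "congius": 6,
    "modius": 16,
    "urna": 24,
    "amphora": 48,
}


def capacity(unit: str) -> tuple[float, float]:
    """Return (cubic inches, litres) for one unit of the given measure."""
    n = SEXTARII[unit]
    return n * SEXTARIUS_CUBIC_INCHES, n * SEXTARIUS_LITRES


for unit in SEXTARII:
    cubic_inches, litres = capacity(unit)
    print(f"{unit:>9}: {cubic_inches:7.2f} cu in = {litres:6.3f} L")
```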

The ancient Chinese system

Completely separated from the Mediterranean-European history of metrology is that of ancient China; yet the Chinese system exhibits all the principal characteristics of the Western. It employed parts of the body as a source of units, such as the distance from the pulse to the base of the thumb. It was fundamentally chaotic in that there was no relationship between different types of units, such as those of length and those of volume. Finally, it was rich in variations. The mou, a unit of land measure, fluctuated from region to region from 0.08 to 0.13 hectare (0.2 to 0.3 acre). Variations were not limited to the geographic; a unit of length with the same name might be of one length for a carpenter, another for a mason, and still another for a tailor. This was a problem in Western weights and measures as well.

Shi Huang Di, who became the first emperor of China in 221 bc, is celebrated for, among other things, his unification of the regulations fixing the basic units. The basic weight, the shi, or dan, was fixed at about 60 kg (132 pounds); the two basic units of length, the zhi and the zhang, were set at about 25 cm (9.8 inches) and 3 metres (9.8 feet), respectively. A noteworthy characteristic of the Chinese system, and one that represented a substantial advantage over the Mediterranean systems, was its predilection for a decimal notation, as demonstrated by foot rulers from the 6th century bc. Measuring instruments, too, were of a high order.

A unique characteristic of the Chinese system was its inclusion of an acoustic dimension. A standard vessel used for measuring grain and wine was defined not only by the weight it could hold but by its pitch when struck; given a uniform shape and fixed weight, only a vessel of the proper volume would give the proper pitch. Thus the same word in old Chinese means “wine bowl,” “grain measure,” and “bell.” Measures based on the length of a pitch pipe and its subdivision in terms of millet grains supplanted the old measurements based on the human body. The change brought a substantial increase in accuracy.

Medieval systems

Medieval Europe inherited the Roman system, with its Greek, Babylonian, and Egyptian roots. It soon proliferated through daily use and language variations into a great number of national and regional variants, with elements borrowed from Celtic, Anglo-Saxon, Germanic, Scandinavian, and Arabic sources and original contributions growing out of the needs of medieval life.

A determined effort by the Holy Roman emperor Charlemagne and many other medieval kings to impose uniformity at the beginning of the 9th century was in vain; differing usages hardened. The great trade fairs, such as those in Champagne during the 12th and 13th centuries, enforced rigid uniformity on merchants of all nationalities within the fairgrounds and had some effect on standardizing differences among regions, but the variations remained. A good example is the ell, the universal measure for wool cloth, the great trading staple of the Middle Ages. The ell of Champagne, two feet six inches, measured against an iron standard in the hands of the Keeper of the Fair, was accepted by Ypres and Ghent, both in modern Belgium; by Arras, in modern France; and by the other great cloth-manufacturing cities of northwestern Europe, even though their bolts varied in length. In several other parts of Europe, however, the ell itself varied. There were hundreds of thousands of such examples among measuring units throughout Europe.

The English and United States Customary systems of weights and measures

The English system

Out of the welter of medieval weights and measures emerged several national systems, reformed and reorganized many times over the centuries; ultimately nearly all of these systems were replaced by the metric system. In Britain and in its American colonies, however, the altered medieval system survived.

By the time of Magna Carta (1215), abuses of weights and measures were so common that a clause was inserted in the charter to correct those affecting grain and wine, demanding a common measure for both. A few years later a royal ordinance entitled “Assize of Weights and Measures” defined a broad list of units and standards so successfully that it remained in force for several centuries thereafter. A standard yard, “the Iron Yard of our Lord the King,” was prescribed for the realm, divided into the traditional 3 feet, each of 12 inches, “neither more nor less.” The perch (later the rod) was defined as 5.5 yards, or 16.5 feet. The inch was subdivided for instructional purposes into 3 barleycorns.

The furlong (a “furrow long”) was eventually standardized as an eighth of a mile; the acre, from an Anglo-Saxon word, as an area 4 rods wide by 40 long. There were many other units standardized during this period.
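The acre's dimensions can be turned into areas using the rod of the Assize. The sketch below assumes, in addition to the figures in the text, a statute mile of 5,280 feet; with that assumption the acre's 40-rod length comes out equal to one furlong.

```python
FEET_PER_YARD = 3
YARDS_PER_ROD = 5.5                              # perch (rod) of the Assize
FEET_PER_ROD = YARDS_PER_ROD * FEET_PER_YARD     # 16.5 feet

ACRE_WIDTH_RODS = 4
ACRE_LENGTH_RODS = 40

acre_square_rods = ACRE_WIDTH_RODS * ACRE_LENGTH_RODS        # 160
acre_square_yards = acre_square_rods * YARDS_PER_ROD ** 2    # 4,840
acre_square_feet = acre_square_rods * FEET_PER_ROD ** 2      # 43,560

# Assumption: a statute mile of 5,280 feet (not given in the text).
FEET_PER_MILE = 5280
furlong_rods = FEET_PER_MILE / 8 / FEET_PER_ROD               # 40.0

print(acre_square_rods, acre_square_yards, acre_square_feet, furlong_rods)
```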

The influence of the Champagne fairs may be seen in the separate English pounds for troy weight, perhaps named after Troyes, one of the principal fair cities, and avoirdupois weight, the term used at the fairs for goods that had to be weighed: sugar, salt, alum, dyes, and grain. The troy pound, used for weighing gold and silver bullion, and the apothecaries’ pound, used for drugs, each contained only 12 troy ounces, whereas the avoirdupois pound contained 16 avoirdupois ounces.

A multiple of the English pound was the stone, which added a fresh element of confusion to the system by equaling neither 12 nor 16 but 14 pounds; stones of dozens of other weights were also used, depending on the products involved. The sacks of raw wool, which were medieval England’s principal export, weighed 26 stones, or 364 pounds; large standards, weighing 91 pounds, or one-fourth a sack, were employed in wool weighing. The sets of standards, which were sent out from London to the provincial towns, were usually of bronze or brass. Discrepancies crept into the system, and in 1496, following a Parliamentary inquiry, new standards were made and sent out, a procedure repeated in 1588, under Queen Elizabeth I. Reissues of standards were common throughout the Middle Ages and early modern period in all European countries.
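The wool-weighing figures are straightforward multiples of the 14-pound stone. The sketch below assumes only the values in the text and shows that the 91-pound standard is exactly 6½ stones, one quarter of a 26-stone sack.

```python
POUNDS_PER_STONE = 14
STONES_PER_WOOL_SACK = 26


def stones_to_pounds(stones: float) -> float:
    """Convert stones to pounds using the 14-pound stone."""
    return stones * POUNDS_PER_STONE


sack_pounds = stones_to_pounds(STONES_PER_WOOL_SACK)   # 364
quarter_sack_pounds = sack_pounds / 4                  # 91.0, the large wool standard

print(sack_pounds, quarter_sack_pounds)
```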

No major revision occurred for nearly 200 years after Elizabeth’s time, but several refinements and redefinitions were added. Edmund Gunter, a 17th-century mathematician, conceived the idea of taking the acre’s breadth (4 perches, or 22 yards), calling it a chain, and dividing it into 100 links. In 1701 the corn bushel in dry measure was defined as “any round measure with a plain and even bottom, being 18.5 inches wide throughout and 8 inches deep.” Similarly, in 1707 the wine gallon was defined as a round measure with an even bottom and containing 231 cubic inches; however, the ale gallon was retained at 282 cubic inches. There was also a corn gallon and an older, slightly smaller wine gallon. There were many other attempts made at standardization besides these, but it was not until the 19th century that a major overhaul occurred.
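Both of the definitions in the preceding paragraph reduce to simple arithmetic. In this sketch the 1701 bushel is treated as a cylinder of 18.5 inches diameter and 8 inches depth, which gives about 2,150.42 cubic inches, the figure quoted later for the U.S. (Winchester) bushel; Gunter's link works out to 7.92 inches, and an acre of 4 by 40 rods to exactly 10 square chains.

```python
import math

# 1701 corn bushel: a round measure 18.5 inches wide and 8 inches deep.
BUSHEL_DIAMETER_IN = 18.5
BUSHEL_DEPTH_IN = 8


def cylinder_volume(diameter: float, depth: float) -> float:
    """Volume of a right circular cylinder from its diameter and depth."""
    radius = diameter / 2
    return math.pi * radius ** 2 * depth


bushel_cubic_inches = cylinder_volume(BUSHEL_DIAMETER_IN, BUSHEL_DEPTH_IN)

# Gunter's chain: the acre's breadth of 4 perches (22 yards) split into 100 links.
CHAIN_YARDS = 4 * 5.5
link_inches = CHAIN_YARDS * 36 / 100

# An acre of 4 by 40 rods is 4,840 square yards, i.e. 1 chain by 10 chains.
acre_in_square_chains = 4840 / CHAIN_YARDS ** 2

print(f"corn bushel: {bushel_cubic_inches:.2f} cubic inches")
print(f"link: {link_inches:.2f} inches, acre: {acre_in_square_chains} square chains")
```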

The Weights and Measures Act of 1824 sought to clear away some of the medieval tangle. A single gallon was decreed, defined as the volume occupied by

10 imperial pounds weight of distilled water weighed in air against brass weights with the water and the air at a temperature of 62 degrees of Fahrenheit’s thermometer and with the barometer at 30 inches.

The same definition was reiterated in an Act of 1878, which redefined the yard:

the straight line or distance between the centres of two gold plugs or pins in the bronze bar…measured when the bar is at the temperature of sixty-two degrees of Fahrenheit’s thermometer, and when it is supported by bronze rollers placed under it in such a manner as best to avoid flexure of the bar.

Other units were standardized during this era as well. See British Imperial System.

Finally, by an act of Parliament in 1963, all the English weights and measures were redefined in terms of the metric system, with a national changeover beginning two years later.

The United States Customary System

In his first message to Congress in 1790, George Washington drew attention to the need for “uniformity in currency, weights and measures.” Currency was settled in a decimal form, but the vast inertia of the English weights and measures system permeating industry and commerce and involving containers, measures, tools, and machines, as well as popular psychology, prevented the same approach from succeeding, though it was advocated by Thomas Jefferson. In these very years the metric system was coming into being in France, and in 1821 Secretary of State John Quincy Adams, in a famous report to Congress, called the metric system “worthy of acceptance…beyond a question.” Yet Adams admitted the impossibility of winning acceptance for it in the United States, until a future time

when the example of its benefits, long and practically enjoyed, shall acquire that ascendancy over the opinions of other nations which gives motion to the springs and direction to the wheels of the power.

Instead of adopting metric units, the United States tried to bring its system into closer harmony with the English, from which various deviations had developed; for example, the United States still used “Queen Anne’s gallon” of 231 cubic inches, which the British had discarded in 1824. Construction of standards was undertaken by the Office of Standard Weights and Measures, under the Treasury Department. The standard for the yard was one imported from London some years earlier, which guaranteed a close identity between the American and English yard; but Queen Anne’s gallon was retained. The avoirdupois pound, at 7,000 grains, exactly corresponded with the British, as did the troy pound at 5,760 grains; however, the U.S. bushel, at 2,150.42 cubic inches, again deviated from the British. The U.S. bushel was derived from the “Winchester bushel,” a surviving standard dating to the 15th century, which had been replaced in the British Act of 1824. It might be said that the U.S. gallon and bushel, smaller by about 17 percent and 3 percent, respectively, than the British, remain more truly medieval than their British counterparts.
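The percentages in the preceding paragraph can be checked with a short calculation. The British figures are not given in the text: the sketch assumes an imperial gallon of about 277.42 cubic inches (its modern value of 4.54609 litres) and an imperial bushel of 8 gallons, which gives differences of roughly 16.7 and 3.1 percent.

```python
US_GALLON_CUBIC_INCHES = 231.0        # Queen Anne's wine gallon
US_BUSHEL_CUBIC_INCHES = 2150.42      # Winchester bushel

# Assumption: the British imperial gallon taken as about 277.42 cubic inches
# (its modern value, 4.54609 litres) and the imperial bushel as 8 gallons.
IMPERIAL_GALLON_CUBIC_INCHES = 277.42
IMPERIAL_BUSHEL_CUBIC_INCHES = 8 * IMPERIAL_GALLON_CUBIC_INCHES


def percent_smaller(us_volume: float, british_volume: float) -> float:
    """How much smaller the U.S. measure is, as a percentage of the British one."""
    return 100 * (1 - us_volume / british_volume)


gallon_gap = percent_smaller(US_GALLON_CUBIC_INCHES, IMPERIAL_GALLON_CUBIC_INCHES)
bushel_gap = percent_smaller(US_BUSHEL_CUBIC_INCHES, IMPERIAL_BUSHEL_CUBIC_INCHES)
print(f"gallon: {gallon_gap:.1f}% smaller, bushel: {bushel_gap:.1f}% smaller")
```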

At least the standards were fixed, however. From the mid-19th century, new states, as they were admitted to the union, were presented with sets of standards. Late in the century, pressure grew to enlarge the role of the Office of Standard Weights and Measures, which, by Act of Congress effective July 1, 1901, became the National Bureau of Standards (since 1988 the National Institute of Standards and Technology), part of the Commerce Department. Its functions, as defined by the Act of 1901, included, besides the construction of physical standards and cooperation in establishment of standard practices, such activities as developing methods for testing materials and structures; carrying out research in engineering, physical science, and mathematics; and compilation and publication of general scientific and technical data. One of the first acts of the bureau was to sponsor a national conference on weights and measures to coordinate standards among the states; one of the main functions of the annual conference became the updating of a model state law on weights and measures, which resulted in virtual uniformity in legislation.

Apart from this action, however, the U.S. government remained unique among major nations in refraining from exercising control at the national level. One noteworthy exception was the Metric Act of 1866, which permitted use of the metric system in the United States.

The metric system of measurement

The development and establishment of the metric system

One of the most significant results of the French Revolution was the establishment of the metric system of weights and measures.

European scientists had for many years discussed the desirability of a new, rational, and uniform system to replace the national and regional variants that made scientific and commercial communication difficult. The first proposal to approximate closely what eventually became the metric system was made as early as 1670. Gabriel Mouton, the vicar of St. Paul’s Church in Lyon, France, and a noted mathematician and astronomer, suggested a linear measure based on the arc of one minute of longitude, to be subdivided decimally. Mouton’s proposal contained three of the major characteristics of the metric system: decimalization, rational prefixes, and the Earth’s measurement as the basis for a definition. Mouton’s proposal was discussed, amended, criticized, and advocated for 120 years before the fall of the Bastille and the creation of the National Assembly made it a political possibility. In April of 1790 one of the foremost members of the assembly, Charles-Maurice de Talleyrand, introduced the subject and launched a debate that resulted in a directive to the French Academy of Sciences to prepare a report. After several months’ study, the academy recommended that the length of the meridian passing through Paris be determined from the North Pole to the Equator, that 1/10,000,000 of this distance be termed the metre and form the basis of a new decimal linear system, and, further, that a new unit of weight should be derived from the weight of a cubic metre of water. A list of prefixes for decimal multiples and submultiples was proposed. The National Assembly endorsed the report and directed that the necessary meridional measurements be taken.

On June 19, 1791, a committee of 12 mathematicians, geodesists, and physicists met with King Louis XVI, who gave his formal approval. The next day, the king attempted to escape from France, was arrested, returned to Paris, and was imprisoned; a year later, from his cell, he issued the proclamation that directed several scientists, including Jean Delambre and Pierre Mechain, to perform the operations necessary to determine the length of the metre. The intervening time had been spent by the scientists and engineers in preliminary research; Delambre and Mechain now set to work to measure the distance on the meridian from Barcelona, Spain, to Dunkirk in northern France. The survey proved arduous; civil and foreign war so hampered the operation that it was not completed for six years. While Delambre and Mechain were struggling in the field, administrative details were being worked out in Paris. In 1793 a provisional metre was constructed from geodetic data already available. In 1795 France enacted a law adopting the metric system. The new law defined the length, mass, and capacity standards and listed the prefixes for multiples and submultiples. With the formal presentation to the assembly of the standard metre, as determined by Delambre and Mechain, the metric system became a fact in June 1799. The motto adopted for the new system was “For all people, for all time.”

The standard metre was defined as “one ten-millionth part of a meridional quadrant of the earth,” as measured by the Delambre-Mechain survey. The gram, the basic unit of mass, was made equal to the mass of a cubic centimetre of pure water at the temperature of its maximum density (4 °C or 39.2 °F). A platinum cylinder known as the Kilogram of the Archives was declared the standard for 1,000 grams.

The litre was defined as the volume equivalent to the volume of a cube, each side of which had a length of 1 decimetre, or 10 centimetres.

The are was defined as the measure of area equal to a square 10 metres on a side. In practice the multiple hectare, 100 ares, became the principal unit of land measure.

The stere was defined as the unit of volume, equal to one cubic metre.
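The original definitions chain together: the litre, are, hectare, and stere are all derived from the metre, and the gram ties mass to volume through water. The sketch below uses exact fractions and takes the gram definition at face value (1 gram of water occupying exactly 1 cubic centimetre), so a kilogram of water fills exactly one litre.

```python
from fractions import Fraction

METRE = Fraction(1)
DECIMETRE = METRE / 10
CENTIMETRE = METRE / 100

litre = DECIMETRE ** 3                   # cube 1 dm on a side, in cubic metres
litre_in_cm3 = litre / CENTIMETRE ** 3   # 1,000 cubic centimetres
are = (10 * METRE) ** 2                  # square 10 m on a side, in square metres
hectare = 100 * are                      # 10,000 square metres
stere_in_litres = METRE ** 3 / litre     # 1 cubic metre = 1,000 litres

# 1,000 grams of water, each occupying 1 cubic centimetre, fill exactly one litre.
kilogram_of_water_in_litres = 1000 * CENTIMETRE ** 3 / litre

print(litre_in_cm3, hectare, stere_in_litres, kilogram_of_water_in_litres)
```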

Names for multiples and submultiples of all units were made uniform, based on Greek and Latin prefixes.

The metric system’s conquest of Europe was facilitated by the military successes of the French Revolution and Napoleon, but it required a long period of time to overcome the inertia of customary systems. Even in France Napoleon found it expedient to issue a decree permitting use of the old medieval system. Nonetheless, in the competition between the two systems existing side by side, the advantages of metrics proved decisive; in 1840 it was established as the legal monopoly in France, and from that point forward its progress throughout the world has been steady, though it is worth observing that in many cases the metric system was adopted during the course of a political upheaval, just as in its original French beginning. Notable examples are Latin America, the Soviet Union, and China. In Japan the adoption of the metric system came about following the peaceful but far-reaching political changes associated with the Meiji Restoration of 1868.

In Britain, the Commonwealth nations, and the United States, the progress of the metric system has been discernible. The United States became a signatory to the Metric Convention of 1875 and received copies of the International Prototype Metre and the International Prototype Kilogram in 1890. Three years later the Office of Weights and Measures announced that the prototype metre and kilogram would be regarded as fundamental standards from which the customary units, the yard and the pound, would be derived.

Throughout the 20th century, use of the metric system in various segments of commerce and industry increased spontaneously in Britain and the United States; it became almost universally employed in the scientific and medical professions. The automobile, electronics, chemical, and electric power industries have all adopted metrics at least in part, as have such fields as optometry and photography. Proposals for general adoption of the metric system have been made in both the U.S. Congress and the British Parliament. In 1968 the former passed legislation calling for a program of investigation, research, and survey to determine the impact on the United States of increasing worldwide use of the metric system. The program concluded with a report to Congress in July 1971 that stated:

On the basis of evidence marshalled in the U.S. metric study, this report (D.V. De Simone, “A Metric America: A Decision Whose Time Has Come,” National Bureau of Standards Special Publication 345) recommends that the United States change to the International Metric System.

Parliament went further and established a long-range program of changeover.

The International System of Units

Just as the original conception of the metric system had grown out of the problems scientists encountered in dealing with the medieval system, so a new system grew out of the problems a vastly enlarged scientific community faced in the proliferation of subsystems improvised to serve particular disciplines. At the same time, it had long been known that the original 18th-century standards were not accurate to the degree demanded by 20th-century scientific operations; new definitions were required. After lengthy discussion the 11th General Conference on Weights and Measures (11th CGPM), meeting in Paris in October 1960, formulated a new International System of Units (abbreviated SI). The SI was amended by subsequent convocations of the CGPM. The following base units have been adopted and defined:

Length: metre

Since 1983 the metre has been defined as the distance traveled by light in a vacuum in 1/299,792,458 second.

Mass: kilogram

The standard for the unit of mass, the kilogram, was for more than a century a cylinder of platinum-iridium alloy kept by the International Bureau of Weights and Measures, located in Sèvres, near Paris. A duplicate in the custody of the National Institute of Standards and Technology served as the mass standard for the United States.

Until 2019 the kilogram was the only base unit still defined by an artifact. However, in 1989 it was discovered that the prototype kept at Sèvres was 50 micrograms lighter than other copies of the standard kilogram. To avoid the problem of having the kilogram defined by an object with a changing mass, the CGPM in 2011 agreed to a proposal to begin to redefine the kilogram not by a physical artifact but by a fundamental physical constant. The constant chosen was Planck’s constant, whose unit is the joule second; one joule is equal to one kilogram times metre squared per second squared. Since the second and the metre were already defined in terms of the frequency of a spectral line of cesium and the speed of light, respectively, fixing the numerical value of Planck’s constant fixes the kilogram. The redefinition took effect on May 20, 2019, with Planck’s constant set at exactly 6.62607015 × 10⁻³⁴ joule second.

Time: second

The second is defined as the duration of 9,192,631,770 cycles of the radiation associated with a specified transition, or change in energy level, of the cesium-133 atom.

Electric current: ampere

Until 2019 the ampere was defined as the magnitude of the current that, when flowing through each of two long parallel wires separated by one metre in free space, results in a force between the two wires (due to their magnetic fields) of 2 × 10⁻⁷ newton (the newton is a unit of force equal to about 0.2 pound) for each metre of length. In 2011 the CGPM agreed to a proposal to begin to redefine the ampere in terms of the elementary charge; since May 20, 2019, the elementary charge has been fixed at exactly 1.602176634 × 10⁻¹⁹ coulomb.

Thermodynamic temperature: kelvin

The thermodynamic, or Kelvin, scale of temperature used in SI has its origin or zero point at absolute zero. Until 2019 it also had a defined fixed point at the triple point of water (the temperature and pressure at which ice, liquid water, and water vapour are in equilibrium), assigned the value 273.16 kelvins. The Celsius temperature scale is derived from the Kelvin scale; the triple point lies at 0.01 degree on the Celsius scale, which is approximately 32.02 degrees on the Fahrenheit temperature scale. In 2011 the CGPM agreed to a proposal to begin to redefine the kelvin in terms of Boltzmann’s constant; since May 20, 2019, that constant has been fixed at exactly 1.380649 × 10⁻²³ joule per kelvin.

Amount of substance: mole

Until 2019 the mole was defined as the amount of substance containing the same number of chemical units (atoms, molecules, ions, electrons, or other specified entities or groups of entities) as exactly 12 grams of carbon-12. In 2011 the CGPM agreed to a proposal to begin to redefine the mole in terms of the Avogadro constant; since May 20, 2019, that constant has been fixed at exactly 6.02214076 × 10²³ per mole.

Light (luminous) intensity: candela

The candela is defined as the luminous intensity in a given direction of a source that emits monochromatic radiation at a frequency of 540 × 10¹² hertz and that has a radiant intensity in the same direction of 1/683 watt per steradian (unit solid angle).

Widely used units in the SI system

A list of the widely used units in the SI system is provided in the table.

Prefixes and units used in the metric system

Prefixes and units used in the metric system are provided in the table.

*The metric system of bases and prefixes has been applied to many other units, such as decibel (0.1 bel), kilowatt (1,000 watts), megahertz (1,000,000 hertz), and microhm (one-millionth of an ohm).
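Applying a prefix is just multiplication by a power of ten. The sketch below converts the footnote's four examples to their unprefixed units; its prefix list is a partial, assumed selection of the standard decimal prefixes rather than a copy of the table referred to above.

```python
# A partial, assumed selection of decimal prefixes and their factors.
SI_PREFIXES = {
    "giga": 1e9,
    "mega": 1e6,
    "kilo": 1e3,
    "hecto": 1e2,
    "deca": 1e1,
    "deci": 1e-1,
    "centi": 1e-2,
    "milli": 1e-3,
    "micro": 1e-6,
    "nano": 1e-9,
}


def to_unprefixed(value: float, prefix: str) -> float:
    """Express a value given in a prefixed unit in the corresponding unprefixed unit."""
    return value * SI_PREFIXES[prefix]


# The footnote's examples as (name, prefix, base unit); "microhm" drops one "o".
EXAMPLES = [
    ("decibel", "deci", "bel"),
    ("kilowatt", "kilo", "watt"),
    ("megahertz", "mega", "hertz"),
    ("microhm", "micro", "ohm"),
]

for name, prefix, base in EXAMPLES:
    print(f"1 {name} = {to_unprefixed(1, prefix):g} {base}")
```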

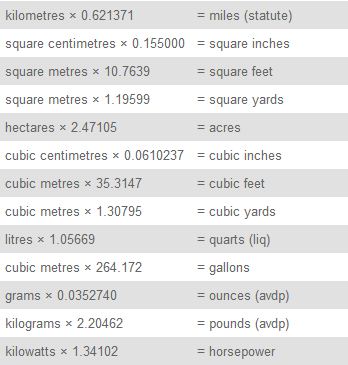

Metric conversions

A list of metric conversions is provided in the table.

*Exact.

**Common term not used in SI.

Source: National Bureau of Standards Wall Chart.

Lawrence James Chisholm

Measurement system. In Encyclopædia Britannica. Retrieved from

http://www.britannica.com/EBchecked/topic/1286365/measurement-system